Auditing Website Content at Scale with AI

With the right prompting, LLMs are very effective at auditing content. Here's how we put that to work at scale across thousands of pages.

Regularly auditing website content is crucial for maintaining the quality and relevance of medium to large websites. However, manually reviewing hundreds of pages can be an incredibly tedious and time-consuming task. Some of the key problems with manual content auditing include:

It's a slow and labor-intensive process

It's prone to human error and inconsistencies

It's difficult to maintain a consistent quality standard across all pages

It's challenging to identify and prioritize areas for improvement

In this article, we'll explore how Large Language Models (LLMs) can streamline the content auditing process, and provide a step-by-step guide on automating content auditing using the Moonlit platform.

Content Auditing using traditional language analysis techniques

Traditional methods for analyzing text, such as n-grams and basic Natural Language Processing (NLP) techniques, have been used for content auditing in the past. These approaches can identify keywords, analyze sentiment, and extract basic entities from the text. However, they have limitations when it comes to understanding the context, coherence, and overall quality of the content.
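To make the n-gram approach concrete, here is a minimal sketch that counts the most frequent word n-grams in a piece of text. The function name `top_ngrams` and the sample sentence are illustrative assumptions. It surfaces repeated phrases, but it says nothing about whether the text is coherent or helpful, which is the limitation described above.

```python
import re
from collections import Counter


def top_ngrams(text: str, n: int = 2, k: int = 3) -> list[tuple[str, int]]:
    """Return the k most frequent word n-grams in the text."""
    # Lowercase and keep only word-like tokens.
    words = re.findall(r"[a-z0-9']+", text.lower())
    # Slide a window of n words across the token list.
    grams = (" ".join(words[i:i + n]) for i in range(len(words) - n + 1))
    return Counter(grams).most_common(k)


sample = "Content audits take time. Content audits need consistency."
print(top_ngrams(sample, n=2, k=1))  # [('content audits', 2)]
```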

Harnessing the Power of LLMs for Efficient Content Auditing

LLMs, such as GPT-3 and Llama, have changed what is practical in Natural Language Processing. These models are trained on vast amounts of data and can interpret and generate human-like text. When applied to content auditing, LLMs can:

Understand the context and meaning of the content

Assess the relevance and usefulness of the information

Identify areas for improvement in terms of clarity, structure, and readability

Provide specific suggestions for optimization

Choosing the right model

When selecting an LLM for content auditing at scale, several factors should be considered:

Cost: Different LLMs have varying pricing models. It's essential to choose a model that fits your budget, especially when processing hundreds of pages.

Speed: The time taken to process each page impacts the overall efficiency of the auditing process. Faster models can significantly reduce the total time required.

Quality: The accuracy and reliability of the LLM's output directly influence the effectiveness of the content audit. Models with higher quality output should be preferred.

Context Window: The maximum input size that the model can process is crucial. Models with larger context windows can handle longer pages without the need for truncation or splitting.
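The cost and context-window factors can be roughly estimated before committing to a model. The sketch below is a back-of-the-envelope calculation, not a real tokenizer: it assumes about 4 characters per token for English text, and the price and window size in the usage lines are hypothetical placeholders, so substitute your provider's published figures.

```python
import math

CHARS_PER_TOKEN = 4  # rough rule of thumb (assumption), not an exact tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate the token count of a text from its character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_context(text: str, context_window: int, reserved_for_output: int = 1000) -> bool:
    """Check whether a page plus room for the model's reply fits in the window."""
    return estimate_tokens(text) + reserved_for_output <= context_window


def estimate_cost(pages: list[str], usd_per_million_input_tokens: float) -> float:
    """Estimate the input-token cost of sending every page once."""
    total_tokens = sum(estimate_tokens(page) for page in pages)
    return total_tokens / 1_000_000 * usd_per_million_input_tokens


# Hypothetical numbers: 500 pages of 8,000 characters, $0.50 per million tokens.
pages = ["x" * 8000] * 500
print(estimate_cost(pages, usd_per_million_input_tokens=0.50))
print(fits_context(pages[0], context_window=8192))
```

This only counts input tokens; output tokens and the prompt itself add to the total.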

How to Automate Content Auditing for your website using Moonlit

While LLMs offer a powerful solution for content auditing, implementing them in production at scale presents several challenges:

API rate limits can restrict the number of pages that can be processed within a given timeframe

The cost of using LLMs can quickly add up when processing a large number of pages

Developing a custom solution requires significant upfront development effort
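To give a sense of what a custom solution has to handle, here is a minimal sketch of retrying a call with exponential backoff when a rate limit is hit. `RateLimitError` is a hypothetical stand-in for whatever exception your provider's client raises on a rate-limit response, and `call_with_backoff` is an illustrative name, not part of any library.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Hypothetical stand-in for a provider's rate-limit error."""


def call_with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, doubling the wait after each rate-limit failure."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_attempts - 1:
                raise  # out of attempts, surface the error
            sleep(base_delay * 2 ** attempt)  # waits 1s, 2s, 4s, ...
    raise AssertionError("unreachable")
```

The `sleep` parameter is injectable so the waiting behavior can be tested without real delays.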

Moonlit provides a no-code platform that simplifies the process of automating content auditing using LLMs. Let's walk through the steps to set up an automated content auditing workflow using Moonlit.

Step 1: Preparing the data

To begin, you'll need a list of page URLs that you want to audit. You can manually create a CSV file with a column named 'loc' containing the URLs, or you can use an online XML sitemap to CSV converter to extract all URLs from your website's sitemap.

Here's an example of how your CSV file might look:
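A file in this shape can also be generated locally rather than through an online converter. The sketch below assumes a standard sitemaps.org `urlset` sitemap (not a sitemap index). The function name `sitemap_to_csv` and the example.com URLs are placeholders for illustration.

```python
import csv
import io
import xml.etree.ElementTree as ET

# Namespace used by standard XML sitemaps.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_to_csv(sitemap_xml: str) -> str:
    """Convert sitemap XML into CSV text with a single 'loc' column."""
    root = ET.fromstring(sitemap_xml)
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["loc"])  # header expected by the workflow
    for loc in root.findall("sm:url/sm:loc", SITEMAP_NS):
        if loc.text and loc.text.strip():
            writer.writerow([loc.text.strip()])
    return out.getvalue()


sample = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/blog/first-post</loc></url>
</urlset>"""

print(sitemap_to_csv(sample))
# loc
# https://example.com/
# https://example.com/blog/first-post
```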

Alternatively, you can use Google Search Console to export a list of your website's pages. To do this:

Go to Google Search Console and select your website

Navigate to the "Pages" report under "Indexing"

Click on "Export" to download a CSV file containing your website's pages

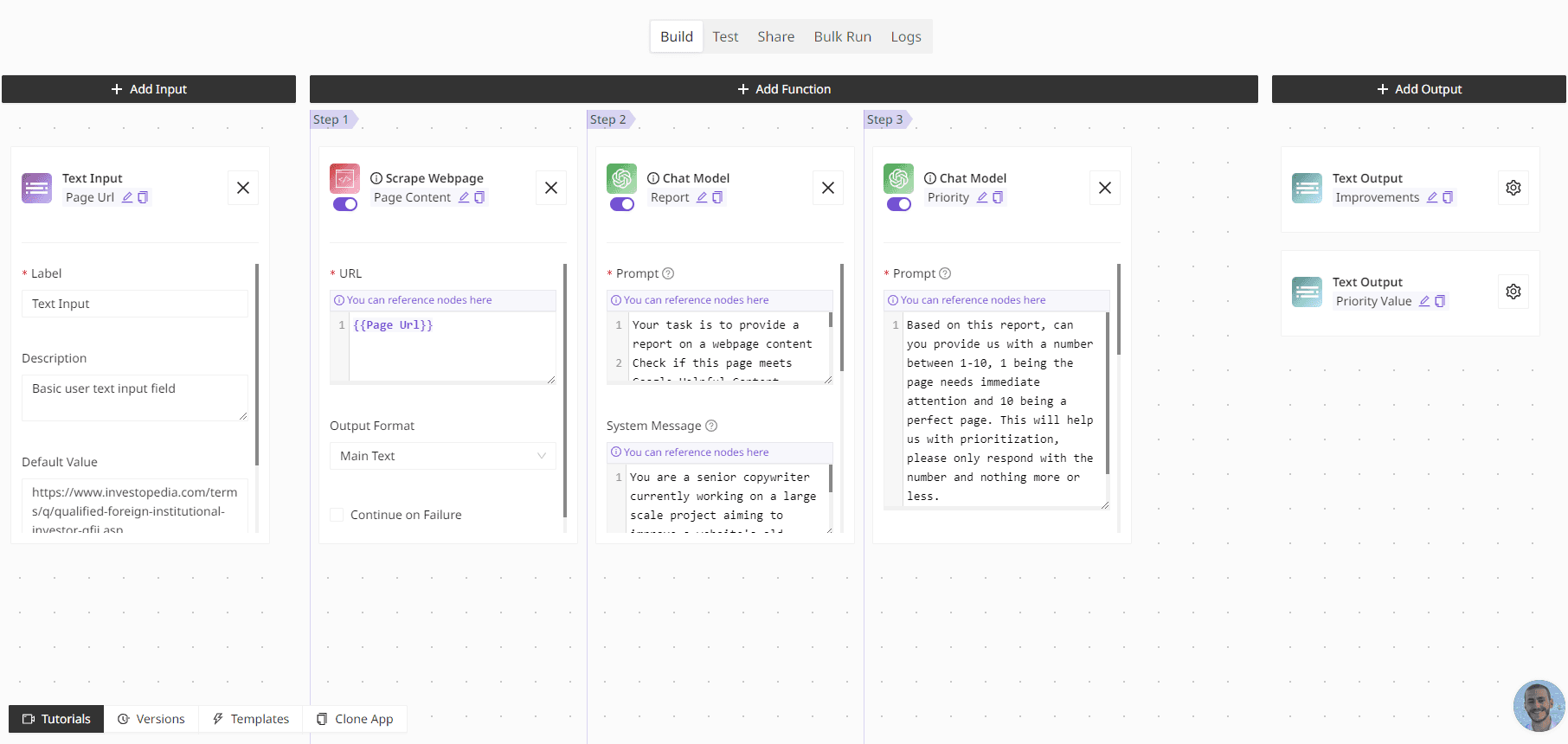

Step 2: Building the Workflow

With Moonlit's intuitive no-code App Editor, creating a content auditing workflow is simple. Our app will consist of two main steps:

Scrape the content from a given page URL

Pass the scraped content through an LLM prompt to assess it against Google's "helpful content guidelines"
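For readers curious what these two steps amount to outside a no-code editor, here is a rough Python sketch using only the standard library. It is not Moonlit's implementation. The `llm` argument is a hypothetical callable standing in for whichever model client you use, and the `{content}` placeholder in the prompt template is an assumed convention.

```python
from html.parser import HTMLParser
from typing import Callable
from urllib.request import urlopen


class _TextExtractor(HTMLParser):
    """Collect visible text, skipping script and style blocks."""

    SKIP = {"script", "style", "noscript"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def html_to_text(html: str) -> str:
    """Reduce an HTML document to its text content, one chunk per line."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.chunks)


def scrape(url: str) -> str:
    """Step 1: fetch a page and extract its text."""
    with urlopen(url, timeout=30) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return html_to_text(resp.read().decode(charset, errors="replace"))


def audit_page(
    url: str,
    llm: Callable[[str], str],
    prompt_template: str,
    fetch: Callable[[str], str] = scrape,
) -> str:
    """Step 2: insert the scraped text into the prompt and ask the model."""
    content = fetch(url)
    return llm(prompt_template.replace("{content}", content))
```

A real scraper would also need to handle JavaScript-rendered pages, redirects, and boilerplate such as navigation menus, which this sketch ignores.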

The prompt we'll be using for the LLM has been carefully crafted and tested by AI SEO specialist Jonathan Boshoff:

We've also added another LLM step after this responsible for assigning a 1-10 priority value, with the prompt:

You can find out more about prompt chaining in Moonlit in this guide. In short, we tick the Include Message History box in the first LLM step and then reference that step in the Message History field of the second LLM step. This means the first LLM step outputs a list of messages rather than a single response, so to get only the report in our output we use dot notation (e.g. {{first_llm.1.content}}), where "1" is the index of the message containing the report. Index "0" would be our prompt.
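The indexing can be pictured with a small sketch. This is only an illustration of how a dotted path walks a list of messages, not Moonlit's actual resolver. The `role` keys and the placeholder strings are assumptions based on the common chat-message shape.

```python
from typing import Any


def resolve(path: str, context: dict[str, Any]) -> Any:
    """Follow a dotted path, treating segments as indexes when inside a list."""
    value: Any = context
    for part in path.split("."):
        value = value[int(part)] if isinstance(value, list) else value[part]
    return value


# Assumed shape of the first LLM step's output when message history is included.
context = {
    "first_llm": [
        {"role": "user", "content": "<audit prompt + scraped page>"},  # index 0
        {"role": "assistant", "content": "<audit report>"},            # index 1
    ]
}

print(resolve("first_llm.1.content", context))  # <audit report>
```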

Model Choice

Considering the factors mentioned earlier, we opted for Llama 3 as our LLM of choice. Llama 3 offers a good balance of speed and quality at a reasonable cost, making it suitable for processing a large number of pages. If you're dealing with a smaller set of pages (e.g., fewer than 20), you might consider using a more powerful model like Claude 3 or GPT-4 for even better results.

Feel free to clone the app into your own Moonlit project, customize it to fit your needs, and test it with different models.

Step 3: Run it at Scale

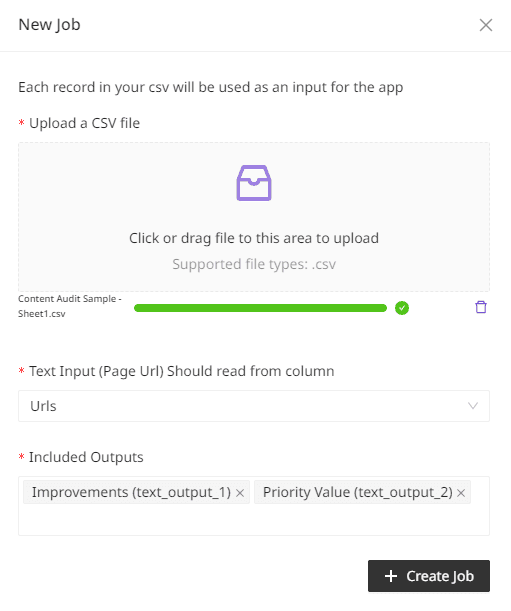

With our app tested and ready, it's time to process our entire list of pages using Moonlit's "Bulk Runs" feature.

Create a new Job

In the Bulk Runs tab, click on "New Job" and upload the CSV file containing your page URLs. Map the 'loc' column to the corresponding input field in the app.

Start Job

Once your data is loaded, click on "Start Job" to begin the content auditing process. Moonlit will execute the app for each row in your CSV, processing 5 rows simultaneously. You can continue working on other tasks while the job runs in the background. Upon completion, you'll receive an email notification with the results.
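Processing a fixed number of rows at a time is a common pattern. As a rough analogy only (this is not how Moonlit is implemented), the sketch below runs an audit function over a list of URLs with at most 5 in flight at once. `run_bulk` and the `audit` callable are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def run_bulk(
    urls: list[str],
    audit: Callable[[str], str],
    workers: int = 5,
) -> list[dict[str, str]]:
    """Audit every URL with a bounded number of concurrent workers."""

    def safe(url: str) -> dict[str, str]:
        # Record failures per row so one bad page does not stop the job.
        try:
            return {"loc": url, "report": audit(url), "error": ""}
        except Exception as exc:
            return {"loc": url, "report": "", "error": str(exc)}

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in the same order as the input URLs.
        return list(pool.map(safe, urls))
```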

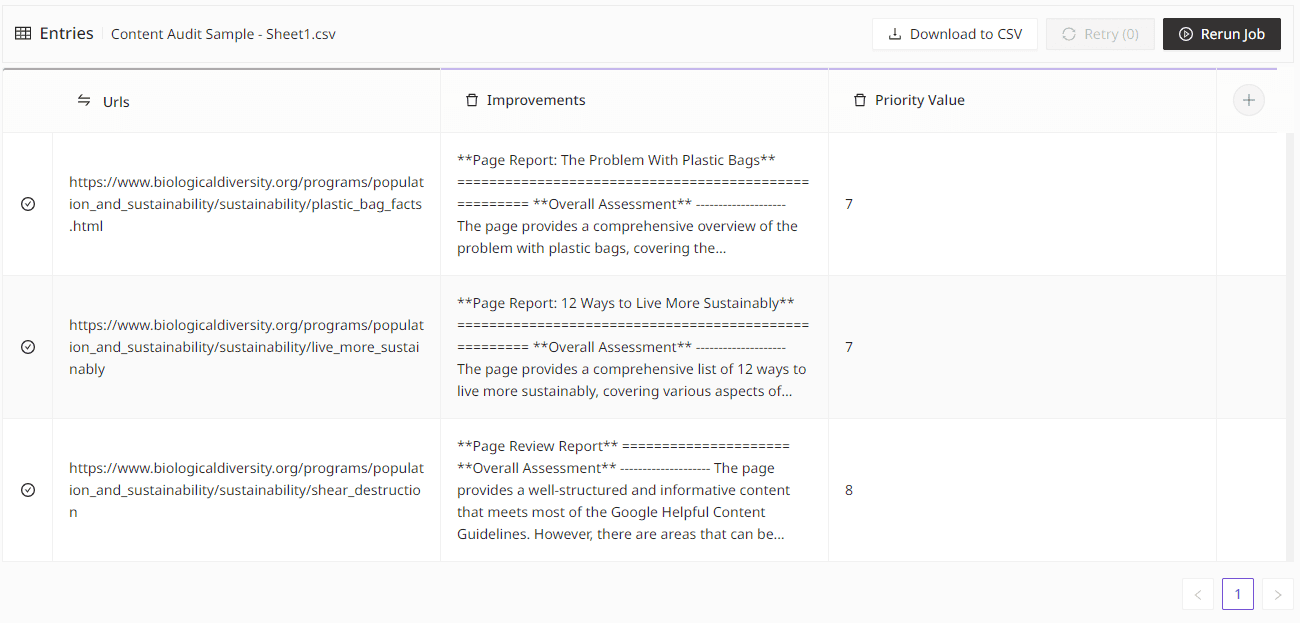

The screenshot above is only for a sample run. To use this data efficiently, download the CSV and sort the pages by priority level (1 being the highest priority and 10 the lowest). Then, for each page, read the report and apply the recommended improvements.
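If you prefer to sort the export in code rather than in a spreadsheet, a minimal sketch follows. The column names `loc` and `priority` are assumptions about the exported file, so adjust them to match your actual headers. The sample rows are made up.

```python
import csv
import io
from typing import Iterable


def sort_by_priority(lines: Iterable[str], column: str = "priority") -> list[dict[str, str]]:
    """Return CSV rows sorted so that priority 1 comes first."""

    def rank(row: dict[str, str]) -> int:
        try:
            return int(row[column])
        except (TypeError, ValueError):
            return 11  # unparseable values sort after every valid 1-10 score

    return sorted(csv.DictReader(lines), key=rank)


sample = io.StringIO(
    "loc,priority\n"
    "https://example.com/a,7\n"
    "https://example.com/b,2\n"
    "https://example.com/c,10\n"
)
for row in sort_by_priority(sample):
    print(row["priority"], row["loc"])
```

For a file on disk, pass an open file object instead, e.g. `open("results.csv", newline="")`, where the file name is a placeholder.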

Streamlining Content Audits with Moonlit and LLMs

In this article, we've explored the challenges of manually auditing website content and how LLMs can significantly streamline the process. By leveraging Moonlit's no-code platform, you can easily create and run a content auditing workflow at scale, saving time and resources while ensuring a consistent quality standard across your website.

Ready to take your content auditing to the next level? Sign up for Moonlit today and start automating your content workflows with the power of LLMs!