Detect and Improve Low CTR Pages Using AI

Use Google Search Console data to detect low-CTR pages with high impressions, then use ChatGPT to suggest improved meta titles and descriptions for each page.

Click-through rate (CTR) is a critical metric in search engine optimization (SEO) that measures the percentage of users who click on a webpage after seeing it in the search results. A low CTR can negatively impact a website's search engine rankings, resulting in reduced organic traffic and potential revenue loss. Traditionally, identifying and improving low CTR pages has been a manual and time-consuming process. However, with the advent of artificial intelligence (AI), SEO professionals now have powerful tools to automate this process and optimize their websites' content more efficiently.
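To make the definition concrete, here is a minimal sketch of the calculation: CTR is clicks divided by impressions, expressed as a percentage. The function name and the sample numbers are illustrative only and do not come from our Search Console data.

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """Return CTR as a percentage; 0.0 when the page had no impressions."""
    if impressions <= 0:
        return 0.0
    return 100.0 * clicks / impressions


# A page shown 1,200 times that received 30 clicks has a CTR of 2.5%.
print(click_through_rate(30, 1200))
```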

In this blog post, we will explore how AI can be used to improve low CTR pages at scale, enhancing overall website performance and driving more organic traffic. We will also delve into the technical aspects of how we built a tool on the Moonlit Platform to achieve this goal. If you're mainly interested in the technical implementation, skip ahead to the "How We Built It" section.

The Importance of CTR in SEO

CTR is a vital indicator of a webpage's relevance and appeal to users. Many SEO practitioners believe that search engines such as Google treat CTR as a ranking signal, rewarding pages with higher CTRs and demoting those with lower ones. A high CTR suggests that users find the page title and meta description compelling, and the content relevant to their search query. Conversely, a low CTR may indicate that the page title and meta description are not effectively communicating the page's value, or that the content does not meet user expectations.

Strategies to Improve Low CTR Pages

Content Optimization Techniques

One of the primary strategies to improve low CTR pages is to optimize the page title and meta description. AI can analyze the existing content and suggest more compelling and relevant titles and descriptions that better align with user search intent and encourage clicks. Additionally, AI can recommend changes to the page content itself, such as improving readability, adding relevant keywords, and structuring the content to better meet user expectations.

User Experience Enhancements

In addition to content optimization, AI can also recommend user experience improvements to increase CTR. This may include optimizing page load times, ensuring mobile-friendliness, and improving website navigation. By providing a seamless and engaging user experience, websites can encourage users to click through from the search results and explore the site further.

Tools and Resources

AI Tools for SEO

There are numerous AI-powered tools available to help SEO professionals optimize their websites and improve CTR. These tools often integrate with popular SEO platforms, such as Google Search Console and Google Analytics, to provide actionable insights and recommendations. Some popular AI tools for SEO include:

Moonlit Platform: A no-code platform that allows users to build and share AI-powered SEO and content tools.

Clearscope: An AI-driven content optimization platform that helps create relevant and high-ranking content.

MarketMuse: An AI-powered content intelligence platform that provides insights and recommendations for content optimization.

Final Thoughts and Recommendations

Automatically detecting and improving low CTR pages using AI is a powerful strategy for enhancing website performance and driving organic traffic. By leveraging AI algorithms to analyze search performance data, identify patterns, and provide targeted recommendations, SEO professionals can optimize their websites more efficiently and effectively.

To get started with AI-driven CTR optimization, we recommend:

Identifying AI tools that integrate with your existing SEO platforms.

Conducting a thorough analysis of your website's search performance data to identify low CTR pages.

Implementing AI-driven optimizations, such as content optimization and A/B testing, on a subset of low-performing pages.

Monitoring the results and iterating on your optimization strategy based on the data.

By embracing AI in your SEO efforts, you can unlock new levels of search performance and stay ahead of the competition in an increasingly data-driven digital landscape.

How We Built It

We recently added the Google Search Console integration to Moonlit and were excited to explore which SEO use cases we could apply it to. So I went to our Search Console account and looked at what the data could tell us to improve. I noticed some pages were ranking but had a very low CTR, and the first things to improve for a better CTR are the title and meta description.

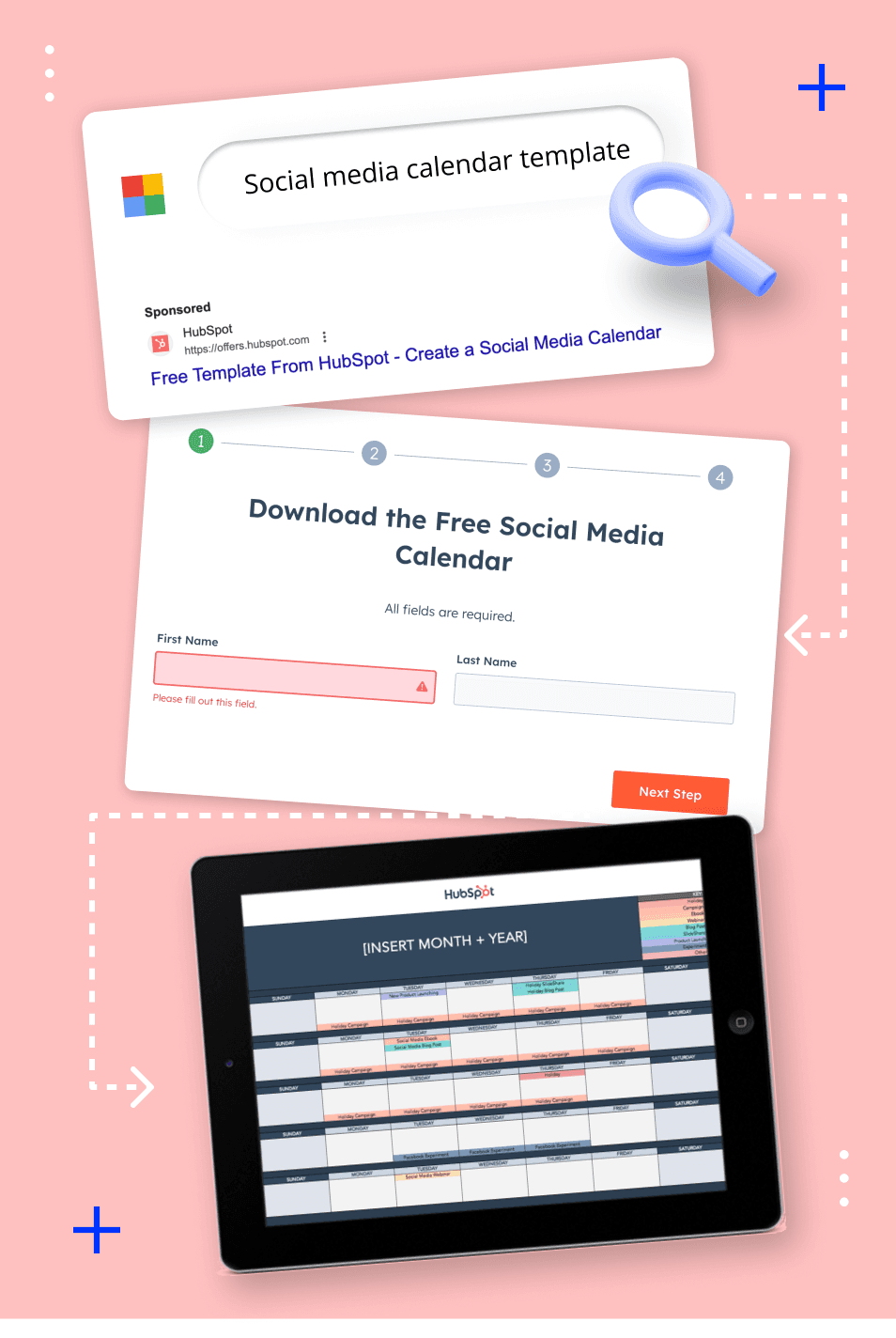

My idea here is to automate the process: first find the pages with low CTR but high impressions, then have an AI model review the meta title and description of each page and suggest new ones to improve the CTR.

Fetching & Preparing the Data

I started by adding a Text Input that serves as the GSC Property, which will be our own website, moonlitplatform.com, for testing. In the logic section, I've added the GSC function with the 'page' dimension, along with a custom Python Function that takes the GSC data and sorts it so that the pages with the lowest CTR and the highest impressions come first.
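As a rough illustration of that sorting step, the sketch below ranks rows by ascending CTR and breaks ties by descending impressions. It is not the code from the app: the row shape (a list of dicts with `page`, `clicks`, `impressions`, and `ctr` keys), the function name `rank_low_ctr_pages`, and the `min_impressions` cutoff are all assumptions made for the example, and the sample pages are invented.

```python
from typing import Dict, List


def rank_low_ctr_pages(
    rows: List[Dict], min_impressions: int = 100, top_n: int = 3
) -> List[Dict]:
    """Return the top_n pages with the lowest CTR, ties broken by highest impressions."""
    # Assumption: pages with very few impressions are skipped because their
    # CTR is noisy and they offer little upside.
    eligible = [r for r in rows if r["impressions"] >= min_impressions]
    # Sort by CTR ascending, then by impressions descending.
    ranked = sorted(eligible, key=lambda r: (r["ctr"], -r["impressions"]))
    return ranked[:top_n]


# Hypothetical sample data; CTR is stored as a fraction between 0 and 1.
sample_rows = [
    {"page": "/pricing", "clicks": 12, "impressions": 4000, "ctr": 0.003},
    {"page": "/blog/a", "clicks": 3, "impressions": 1000, "ctr": 0.003},
    {"page": "/docs", "clicks": 90, "impressions": 1500, "ctr": 0.06},
    {"page": "/about", "clicks": 0, "impressions": 20, "ctr": 0.0},
    {"page": "/blog/b", "clicks": 10, "impressions": 1000, "ctr": 0.01},
]

for row in rank_low_ctr_pages(sample_rows):
    print(row["page"], row["impressions"], row["ctr"])
```

With this sample, `/about` is dropped for having too few impressions, and `/pricing` ranks ahead of `/blog/a` because both share the same CTR but `/pricing` has more impressions.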

Note that I've limited this to the top 3 pages. However, the same automation can be used for a sitewide audit: instead of the top 3 pages, it can cover the top 10% of pages with the lowest CTR and highest impressions. To do that, check out the last section of this article.
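For the sitewide variant, the fixed count becomes a percentage cutoff. The sketch below assumes the rows have already been ranked worst-first; `take_worst_percent` is a hypothetical helper written for this example, not a Moonlit function, and the generated pages are placeholders.

```python
import math
from typing import Dict, List


def take_worst_percent(ranked_rows: List[Dict], percent: float = 10.0) -> List[Dict]:
    """Keep the first `percent` of an already-ranked list, rounding up, at least one row."""
    if not 0 < percent <= 100:
        raise ValueError("percent must be greater than 0 and at most 100")
    if not ranked_rows:
        return []
    keep = max(1, math.ceil(len(ranked_rows) * percent / 100))
    return ranked_rows[:keep]


# Hypothetical input: 20 pages already ordered from worst CTR to best.
ranked = [
    {"page": f"/page-{i}", "ctr": i / 1000, "impressions": 5000}
    for i in range(1, 21)
]

selected = take_worst_percent(ranked, percent=10)
print([r["page"] for r in selected])
```

Rounding up means a small site still gets at least one page to review.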

Now that we have our low-CTR, high-impression pages, we can take each page's meta title and description and pass them to ChatGPT to analyze and suggest improved metas that are likely to improve CTR.

Passing It to ChatGPT to Suggest Improvements

The process involves first retrieving the homepage to give ChatGPT context about the website and its intended audience. In the same step, we'll also scrape the target page we want to improve, including its content, title, and description. Then we'll pass all this information to ChatGPT so it can suggest an improved version of the metas for the page.

Since we'll be doing this for 3 pages, instead of implementing this logic three times, I will create a separate app called 'Improve Metas' to handle that functionality. Then I'll deploy it as a function and use it in the original app for each page.

To do that, I've created the new app and started by adding two inputs: one for the target page URL and one for a site description. The latter helps our AI model understand the intended audience.

You can clone this App "Improve Metas" if you want to check out the code and its logic.

In the first two steps here, I've used the Scrape Webpage function to return the full HTML of the page. Then in the Custom Python snippet, I've processed this HTML string using the BeautifulSoup library to extract the metas and the page text content.
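To give a sense of what that extraction step does, here is a minimal sketch. The app itself uses BeautifulSoup; this example swaps in Python's standard-library `html.parser` so it runs without extra dependencies. The class and function names (`MetaExtractor`, `extract_metas`) and the sample HTML are made up for illustration.

```python
from html.parser import HTMLParser


class MetaExtractor(HTMLParser):
    """Collect the <title>, the meta description, and the visible text of a page."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.description = ""
        self.text_parts = []
        self._in_title = False
        self._skip_depth = 0  # greater than 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "meta" and (attr_map.get("name") or "").lower() == "description":
            self.description = (attr_map.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False
        elif tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._in_title:
            self.title += data
        elif not self._skip_depth and data.strip():
            self.text_parts.append(data.strip())


def extract_metas(html: str) -> dict:
    """Return the title, meta description, and text content of an HTML string."""
    parser = MetaExtractor()
    parser.feed(html)
    parser.close()
    return {
        "title": parser.title.strip(),
        "description": parser.description,
        "text": " ".join(parser.text_parts),
    }


sample_html = """<html><head><title>Pricing | Example</title>
<meta name="description" content="Plans and pricing."></head>
<body><h1>Pricing</h1><script>var x = 1;</script><p>Choose a plan.</p></body></html>"""

print(extract_metas(sample_html))
```

Script and style contents are skipped so that only reader-visible text reaches the model.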

Once we have the required data, I pass it all to our AI model to generate the final output. Again, feel free to clone the app to view or change the prompt.
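The actual prompt lives in the app, so the sketch below is only a guess at its general shape: it assembles the site description, the current metas, and a truncated excerpt of the page text into one instruction. The function name `build_meta_prompt`, the wording, the suggested length targets (commonly cited SEO guidelines, not hard limits), and the `max_chars` cutoff are all assumptions.

```python
def build_meta_prompt(
    site_description: str,
    page_url: str,
    title: str,
    description: str,
    page_text: str,
    max_chars: int = 4000,
) -> str:
    """Assemble a single prompt string asking a chat model for improved metas."""
    # Truncate long pages so the prompt stays within a reasonable size.
    excerpt = page_text[:max_chars]
    return (
        "You are an SEO copywriter.\n"
        f"Website summary: {site_description}\n"
        f"Page URL: {page_url}\n"
        f"Current meta title: {title}\n"
        f"Current meta description: {description}\n"
        f"Page content excerpt:\n{excerpt}\n\n"
        "Suggest an improved meta title (about 60 characters) and meta "
        "description (about 155 characters) that accurately reflect the page "
        "and are more likely to earn a click from search results."
    )


# Hypothetical values for illustration.
prompt = build_meta_prompt(
    site_description="A no-code platform for building AI-powered SEO tools.",
    page_url="https://example.com/pricing",
    title="Pricing",
    description="Plans and pricing.",
    page_text="Choose a plan that fits your team.",
)
print(prompt)
```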

Now that this app is functional, we can deploy it as a function to use in our original app.

Remember that in the first part of this walkthrough we stopped after preparing the GSC data. Now that we have our function for improving metas, I've added three instances of it, one for each of our pages. I also added another text input to capture the website description from the user and passed it to the 'Website Summary' field in our 'Improve Metas' function.

Testing

In the outputs, I've included a table to display the 3 pages we're working to improve along with a text output to display each page's old and new suggested metas.

Running It at Scale

That sums up the functionality of our tool. But what if we have a website with thousands of pages and want the top 100 instead of just the top 3? Here's what you need to do to run this functionality on any number of pages:

Prepare the data by running this app and downloading the resulting table. Just fill in the number of pages and property to extract the data from.

Clone this App and go to the 'Build' section. Delete the 'Site Description' input and write it manually in the Chat Model prompt. Just replace the reference to the input ID ({{text_input_37732}}) with the actual website summary.

Go to the bulk run tab and upload the CSV you downloaded from step one. Map the 'page' column to the input and include the 'Suggested Metas' output.

Start the job and download it once it's done.
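If you would rather prepare the bulk-run file yourself, the sketch below shows one way to write ranked rows to CSV with a `page` column, which is the column the mapping step expects. The extra `impressions` and `ctr` columns, the function name `rows_to_csv`, and the sample rows are assumptions for the example; the table downloaded from the app may be laid out differently.

```python
import csv
import io
from typing import Dict, List


def rows_to_csv(rows: List[Dict]) -> str:
    """Serialize ranked rows to CSV text with page, impressions, and ctr columns."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=["page", "impressions", "ctr"], lineterminator="\n"
    )
    writer.writeheader()
    for row in rows:
        # Keep only the columns needed for the bulk run.
        writer.writerow(
            {"page": row["page"], "impressions": row["impressions"], "ctr": row["ctr"]}
        )
    return buffer.getvalue()


# Hypothetical rows for illustration.
bulk_rows = [
    {"page": "https://example.com/pricing", "clicks": 12, "impressions": 4000, "ctr": 0.003},
    {"page": "https://example.com/blog/a", "clicks": 3, "impressions": 1000, "ctr": 0.003},
]

csv_text = rows_to_csv(bulk_rows)
print(csv_text)
```

Writing to a string keeps the example self-contained; to produce a file, write `csv_text` to a path of your choice.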

By following these steps, you can scale the AI-driven CTR optimization process to handle a large number of pages, ensuring a comprehensive and efficient analysis of your website's search performance.